If you’re working in data engineering or managing complex workflows, you’ve probably heard of Apache Airflow. It’s a handy tool for automating and orchestrating data pipelines. It takes the hassle out of making sure every step runs on time and in the right order. Whether a step moves data, triggers another job, or needs to recover from a failure, Airflow coordinates when it runs and keeps track of how it went.

Let’s dive into a simple, step-by-step guide on how to get started with Airflow and manage your data workflows more efficiently.

What is Apache Airflow?

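Apache Airflow is an open-source platform for authoring, scheduling, and monitoring workflows. Each workflow is defined as a DAG (Directed Acyclic Graph):

- The tasks are the nodes.
- The arrows between them say which task must finish before another can start.
- “Acyclic” means the arrows never loop back, so a task can never end up waiting on itself.

These DAGs are like flowcharts, showing the order in which tasks should run. Airflow’s scheduler works out when each task is ready and hands it off to be executed, so you can focus on the bigger picture.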
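Because the graph is acyclic, there is always at least one order in which every task runs after everything it depends on. You can see this without Airflow by using Python’s standard-library `graphlib` (Python 3.9+). The sketch below is a model of the idea, not Airflow’s own code. It describes a hypothetical four-task pipeline as a dictionary of upstream dependencies (the task names are made up) and shows that adding a loop makes any valid order impossible.

```python
from graphlib import CycleError, TopologicalSorter  # standard library, Python 3.9+

# Hypothetical pipeline: each task maps to the set of tasks it depends on (its upstream tasks).
pipeline = {
    "extract": set(),
    "transform": {"extract"},
    "load": {"transform"},
    "notify": {"load"},
}


def run_order(graph):
    """Return one valid execution order; raises CycleError if the dependencies loop."""
    return list(TopologicalSorter(graph).static_order())


print(run_order(pipeline))  # ['extract', 'transform', 'load', 'notify']

# Making 'extract' depend on 'load' closes a loop, so no valid order exists.
looped = dict(pipeline, extract={"load"})
try:
    run_order(looped)
except CycleError as err:
    print("cycle detected:", err.args[1])  # args[1] holds the nodes in the cycle
```

Airflow applies the same rule when it parses your DAG files: a file whose dependencies form a cycle is reported as an error instead of being scheduled.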

Why Use Airflow?

1. Task Scheduling: Automate when tasks run (daily, hourly, etc.).

2. Dependency Management: Make sure tasks run in the right order.

3. Error Handling: Airflow automatically retries failed tasks based on your settings.
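At its core, scheduling means computing the moments a workflow should fire. The sketch below is a rough, Airflow-independent model: `scheduled_runs` and the dates are hypothetical. It lists when a fixed-interval schedule would trigger between a start time and a cutoff. In recent Airflow versions you express the same intent through the DAG’s `schedule` argument, which accepts presets such as `"@daily"`, cron strings, or a `timedelta`. Airflow’s real timetables also handle time zones and data intervals, which this toy ignores.

```python
from datetime import datetime, timedelta


def scheduled_runs(start, interval, until):
    """Yield each time a fixed-interval schedule fires, from start up to (not including) until."""
    current = start
    while current < until:
        yield current
        current += interval


# Hypothetical hourly schedule over one morning.
runs = list(scheduled_runs(datetime(2024, 1, 1, 6), timedelta(hours=1), datetime(2024, 1, 1, 9)))
for run in runs:
    print(run.isoformat())
# 2024-01-01T06:00:00
# 2024-01-01T07:00:00
# 2024-01-01T08:00:00
```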

Step-by-Step: Creating a Workflow with Airflow

Set Up Airflow Using Docker

Rather than installing Airflow directly on your machine, you can use Docker to set it up quickly and easily. Here’s how:

1. Install Docker: If you don’t have it already, install Docker along with Docker Compose (Docker Desktop includes both).

2. Get the Docker Compose File: Download the `docker-compose.yaml` file from the “Running Airflow in Docker” page of the official Airflow documentation.

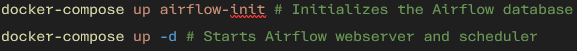

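3. Start Airflow: Navigate to the directory where the file is saved. The official guide’s steps are roughly these:
   - Create the folders the compose file mounts with `mkdir -p ./dags ./logs ./plugins ./config`.
   - On Linux, also run `echo -e "AIRFLOW_UID=$(id -u)" > .env` so that files are created with your user ID.
   - Initialize the metadata database with `docker compose up airflow-init`.
   - Start all the services with `docker compose up`.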

4. Access the UI: Head to http://localhost:8080 to open the Airflow dashboard. With the official compose file, the default login is `airflow` / `airflow`.

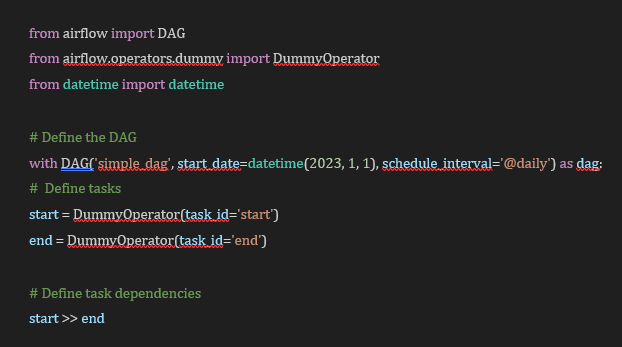

Creating Your First DAG

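Once Airflow is up, it’s time to create a simple workflow. In Airflow, workflows are defined in ordinary Python files. Each file does three things:

- It creates a `DAG` object, giving it an ID, a start date, and a schedule.
- It defines tasks using operators such as `PythonOperator` or `BashOperator`.
- It declares the task order with `>>`. For example, `extract >> load` means the `load` task runs only after `extract` succeeds.

Save your DAG file in the dags/ directory you created earlier. The scheduler scans that folder periodically, so after a short delay your DAG shows up in the Airflow UI. By default, new DAGs start out paused, so switch yours on in the UI before it will be scheduled.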
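Airflow’s own classes need an Airflow installation, so here is a self-contained plain-Python toy that mimics the shape of a two-task DAG file. It is a model of the idea, not Airflow’s API or scheduler. It has three parts, all hypothetical:

- `Task` objects each wrap a function.
- `>>` records that one task is upstream of another, using the same operator Airflow uses.
- A `run_dag` helper executes the tasks in dependency order.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+


class Task:
    """Toy stand-in for an Airflow task (hypothetical; not Airflow's operator API)."""

    def __init__(self, task_id, func):
        self.task_id = task_id
        self.func = func
        self.upstream = set()  # IDs of tasks that must run before this one

    def __rshift__(self, other):
        # Mimics Airflow's `a >> b`: record that `other` runs after `self`.
        other.upstream.add(self.task_id)
        return other  # returning `other` allows chains such as a >> b >> c


def run_dag(tasks):
    """Run each task once, after all of its upstream tasks; return the execution order."""
    by_id = {task.task_id: task for task in tasks}
    graph = {task.task_id: task.upstream for task in tasks}
    executed = []
    for task_id in TopologicalSorter(graph).static_order():
        by_id[task_id].func()
        executed.append(task_id)
    return executed


# Two hypothetical tasks, mirroring a minimal two-task DAG.
extract = Task("extract", lambda: print("pulling rows from the source"))
load = Task("load", lambda: print("writing rows to the warehouse"))
extract >> load

print(run_dag([load, extract]))  # list order is irrelevant; dependencies decide
```

The `>>` line carries over directly: in a real Airflow DAG file you write the same expression between operator instances. Airflow’s scheduler, not a loop like `run_dag`, then decides when each task actually runs.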

Schedule and Monitor

Once your DAG is defined, Airflow will handle the scheduling. You can monitor your DAG using Airflow’s web interface, which gives you a visual representation of the workflow and the status of each task.

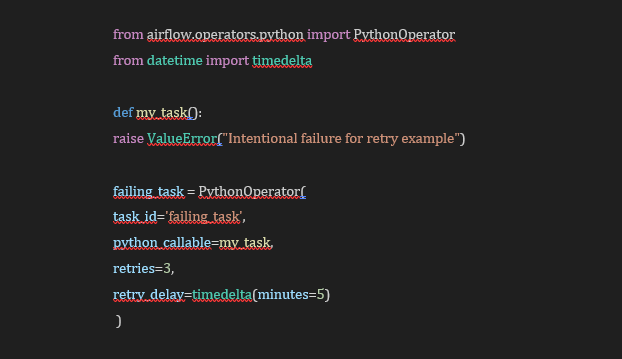

Handling Failures with Retries

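Sometimes tasks fail, and that’s normal: a source system is briefly down, or a network call times out. Airflow lets you define retry logic to handle this. For example, to have a task retry up to three times if it fails, pass `retries=3` and `retry_delay=timedelta(minutes=5)` (using `timedelta` from Python’s `datetime` module) when you create the operator. Alternatively, put both in the DAG’s `default_args` so every task inherits them.

With these settings, a failing task gets up to four attempts in total: the first run plus three retries, with a 5-minute wait before each retry. The task is marked as failed only if the last attempt also fails.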
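To see what Airflow’s `retries` and `retry_delay` task arguments mean in practice, here is a plain-Python model of the retry loop. It is not Airflow’s implementation, and `run_with_retries` and `make_flaky` are hypothetical helpers. It counts attempts as described for a task that should retry up to three times with a 5-minute delay: one initial try plus up to `retries` retries, waiting `retry_delay` before each retry. The sleep function is injectable so the demo finishes instantly.

```python
from datetime import timedelta


def run_with_retries(task, retries=3, retry_delay=timedelta(minutes=5), sleep=lambda seconds: None):
    """Call task(); on an exception, wait retry_delay and try again, up to `retries` more times.

    Returns (result, attempts). `sleep` receives the delay in seconds; pass time.sleep to really wait.
    """
    attempt = 0
    while True:
        attempt += 1
        try:
            return task(), attempt
        except Exception:
            if attempt > retries:
                raise  # first try plus every retry used up: the task counts as failed
            sleep(retry_delay.total_seconds())


def make_flaky(failures):
    """Hypothetical task that raises `failures` times before succeeding."""
    calls = {"n": 0}

    def task():
        calls["n"] += 1
        if calls["n"] <= failures:
            raise ConnectionError("source not reachable yet")
        return "ok"

    return task


waits = []
result, attempts = run_with_retries(make_flaky(2), sleep=waits.append)
print(result, attempts, waits)  # ok 3 [300.0, 300.0]
```

One design difference is worth knowing: Airflow does not keep a worker sleeping between attempts. The failed task moves to the `up_for_retry` state, and the scheduler queues it again once the delay has passed.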

Wrapping Up

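Airflow is a lifesaver for data engineers. It can scale from a single machine to many workers (for example, with the Celery or Kubernetes executors). It offers flexibility because pipelines are plain Python code, and it provides a clean web interface for monitoring workflows as they run. Plus, with Docker, getting a local setup running is a breeze.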

So, if you’re looking for a reliable way to orchestrate your data workflows, Airflow is a solid choice.
